It’s very easy to get caught up in what digital marketing experts call “Best Practices.” A best practice is defined as a technique that has been generally accepted as superior because it has produced positive results in a wide variety of circumstances.

A great example of this is the use of video. We all know that videos work better than static images, right? That’s what I used to think until I started split testing static images against videos. The results I saw were pretty surprising, and I’ll share those results with you in a bit.

Another best practice is the use of trust seals. You’ve seen these in various places within the cart and checkout steps.

A more general best practice is the use of promotions. We all know that promotions tend to bring in additional revenue, ideally incremental revenue that you wouldn’t have achieved otherwise. I’ll show you a surprising example below where that didn’t work out so well.

In the daily split testing we do for clients, we try to teach them to always be skeptical and to make no assumptions. Videos, trust seals and promotions generally do work, but the only way to really know for sure is to test all of these ideas so you can quantify the value.
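To make “quantify the value” a bit more concrete, here is a minimal Python sketch of one common way to check whether the difference between two page versions is bigger than random noise: a two-proportion z-test. It is an illustration only, not necessarily the method behind the tests discussed in this post, and the function name and the visitor and conversion counts are made up for the example.

```python
from math import sqrt, erfc


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare variation B against control A.

    Returns (relative_lift, z_score, two_sided_p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both pages convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    lift = (p_b - p_a) / p_a
    return lift, z, p_value


# Hypothetical numbers: 10,000 visitors per version,
# 500 conversions on the control and 560 on the variation.
lift, z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=560, n_b=10_000)
print(f"lift={lift:.1%}, z={z:.2f}, p={p:.3f}")
```

A small p-value suggests the difference is unlikely to be chance alone. What counts as “small enough” is a judgment call that should be made before the test starts, not after.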

Let’s look at a few test examples where the outcome was quite unexpected.

Test #1: Video vs Static Image

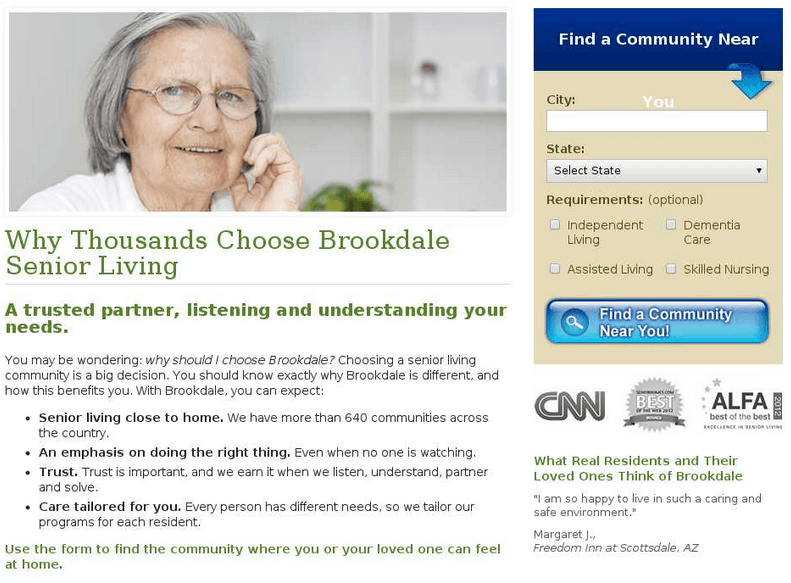

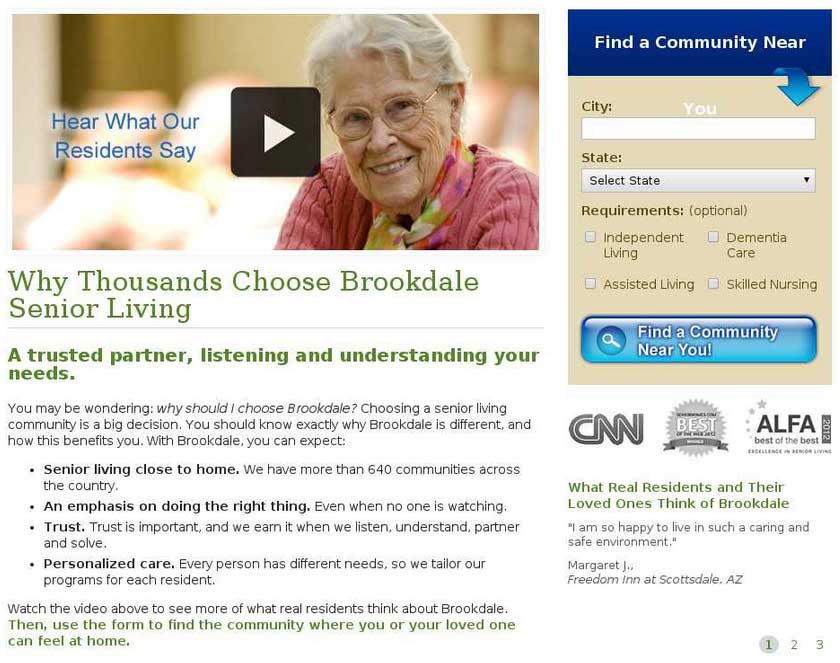

Company: Brookdale Assisted Living

Test Description: This test was set up to determine if a compelling video worked better than a static image in getting more clicks on the “Find a Community Near You” button.

Here was the original page with the static image.

And here was the test variation that used an emotional video with residents being interviewed and saying how much they loved staying at Brookdale.

Test Results: The static image recorded a 3.92% improvement in “Find a Community” button clicks, resulting in $106,000 of additional monthly revenue.

After seeing the video, I would have put my money on the video variation producing more clicks. The fact that the static image won was quite a surprise. It’s a good example of why it is so important to test everything and not make assumptions about what may work on your site.

Test #2: Use of Trust Seals

Let’s look at an example of using a trust seal on an email signup form. Below is the original signup form. By placing a privacy trust seal near the ‘Submit’ button, this company assumed that visitor anxiety would be minimized, resulting in more form submissions.

Original

In the test variation, the privacy trust seal was removed in order to see the effect on registrations.

Test Variation

Test Results: Quite surprisingly, the seal actually reduced signups. The test variation containing no seal at all produced a 12.6% lift in ‘submit’ clicks.

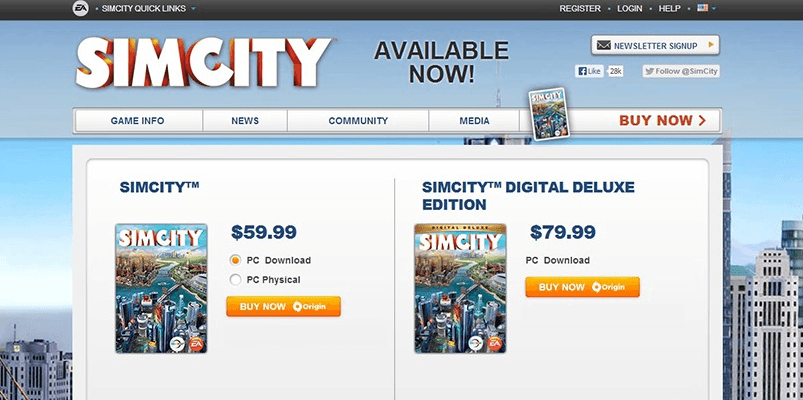

Test #3: Use of Promotions

As a final example, we’ll look at game publisher Electronic Arts. They tested the use of a promotional banner on the page for their popular game SimCity. The promotion offered a $20 coupon toward a future purchase if the visitor pre-ordered the game.

Here was the original page.

Original

Here was the test variation with the promotional banner removed.

Test Variation

Test Results: Electronic Arts was quite surprised to see that the original page with the promotional banner had a purchase conversion rate of 5.8%, while the test variation without any offer at all converted at 10.2%.
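For a sense of scale, those two conversion rates can be turned into a relative lift. The short Python sketch below uses only the two percentages reported above; the helper name `relative_lift` is just for illustration.

```python
def relative_lift(control_rate, variation_rate):
    """Relative change of the variation's rate compared with the control's."""
    return (variation_rate - control_rate) / control_rate


with_banner = 0.058     # original page (control), as reported above
without_banner = 0.102  # test variation with the banner removed

lift = relative_lift(with_banner, without_banner)
print(f"{lift:.1%}")  # prints 75.9%
```

In other words, removing the offer did not just add 4.4 percentage points to the conversion rate. Relative to the original page, it was an increase of roughly 76%.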

Concluding Thoughts

A/B testing is a very quick and simple way to test everything on your site. The testing team here at Americaneagle.com obtains surprising test results on a daily basis. What works very well on one site may not work at all on another site, even within the same industry.

If you have never conducted A/B testing on your site, give us a call. We’ll be happy to help get you started, including coming up with an initial test idea and running a free pilot test. It’s the easiest way to show the value of bringing a more rigorous and scientific approach to website optimization.