As marketers, we are constantly making changes to our websites: adjusting headlines, changing images, adding buttons, producing more videos, creating special offers, and so on. The list never ends. But how do we know that these changes are actually producing tangible business results? More importantly, how can we prove that a specific change directly caused an improvement in revenue or lead generation? The answer is that we can’t, unless we A/B test our ideas. An A/B test is a scientifically controlled experiment we run on a website to quantify whether a change is actually providing incremental business value.

Let’s take a look at a hypothetical example. Suppose a company decides to add a “120% Price Guarantee” icon to all its product detail pages. The price guarantee states that if a consumer finds a lower price for the same product at a different merchant, the company will credit 120% of the difference. The company adds the icon to all its product detail pages without testing the idea. After the change, it immediately notices an improvement in conversion rate and assumes the price guarantee caused it.

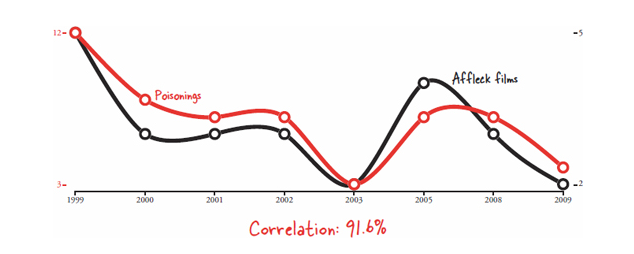

In the testing world, we have a saying: correlation does not imply causation. Statisticians use this phrase to emphasize that just because two variables appear correlated in the data, that does not prove that one caused the other. For an extreme example, take a look at the chart below from Tyler Vigen’s book Spurious Correlations.

Figure 1: Ben Affleck film appearances vs accidental pesticide poisonings

The graph above shows a 91.6% correlation between the number of Ben Affleck film appearances and the number of accidental poisonings by pesticides. This is real data, culled from the Internet Movie Database and the Centers for Disease Control and Prevention. Of course, we know there is no causal relationship between these two variables; it’s an extreme example of how we can draw false conclusions about changes on our website from spurious correlations.
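Assuming the 91.6% figure is a Pearson correlation coefficient (r = 0.916), the minimal Python sketch below shows how such a number is computed. The two series are made-up values, not Vigen’s data; the point is that the formula only measures how closely two series move together and contains nothing about cause.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances (unnormalized; the n terms cancel in r).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical yearly counts for two unrelated quantities (not real data).
film_appearances = [2, 3, 1, 4, 3, 5]
poisonings = [110, 120, 100, 130, 118, 140]

r = pearson_r(film_appearances, poisonings)
# Strongly positive, yet nothing in the calculation says either series causes the other.
print(f"r = {r:.3f}")
```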

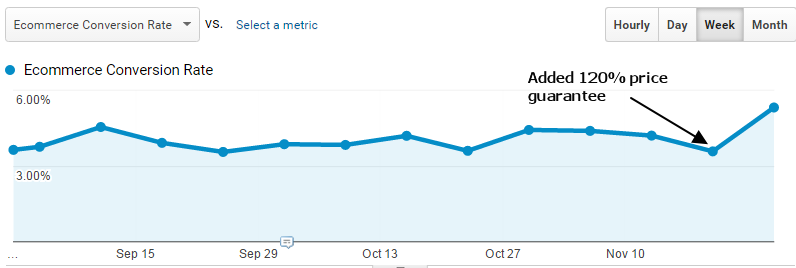

Going back to our original example, if the company adds a “120% Price Guarantee” icon to all its product detail pages and notices the conversion rate go up immediately afterward, logic would seem to dictate that the change caused the improvement.

Figure 2: Google Analytics showing a conversion rate spike after a website change

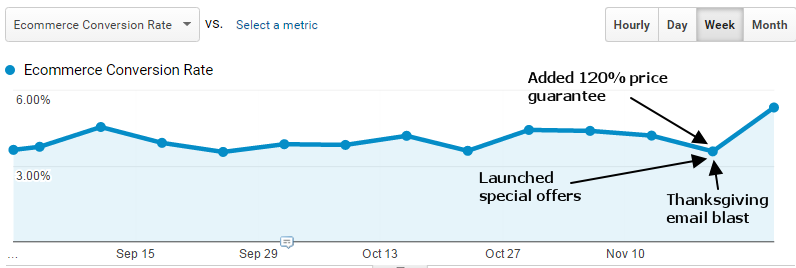

The problem is that, without A/B testing your ideas, a slew of outside factors could account for the improvement seen in your web analytics program. The change may well be causing the improvement, but without testing, you don’t know for sure. Perhaps the real cause was that pre-Thanksgiving email blast, or the new offers you started promoting on the homepage.

Figure 3: Without testing, you can't be sure what caused the improvement

By testing the addition of the 120% price guarantee icon, you isolate that factor and can confirm whether the improvement is really due to that change rather than some other outside factor. Let’s see how this works in practice.

Here’s an example of a product detail page from our company.

Figure 4: Original Product Detail Page

And here is what the product detail page looks like after the addition of the 120% guarantee icon.

Figure 5: Same product detail page with 120% guarantee icon added

Our goal is to test the addition of the 120% guarantee icon in order to quantify how it affects transactions and conversion rate. We do this by splitting the traffic to the product detail pages: 50% of the traffic will see the original product detail pages without the icon, and the other 50% will see the version with the icon. Here is how this looks graphically.

Figure 6: 50% of traffic sees the original page and other 50% sees the test variation

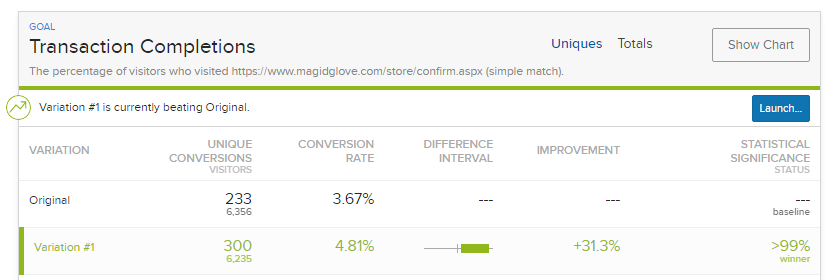
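Testing platforms handle the traffic split for you, but it helps to see what it involves. Below is a minimal Python sketch of one common approach, assuming each visitor carries a stable ID (for example, from a cookie): hash the ID together with an experiment name and use the result to pick a bucket, so each visitor sees the same version on every visit. The function name `assign_variation` and the experiment name are hypothetical, and this is not necessarily how Optimizely or Visual Website Optimizer implement bucketing.

```python
import hashlib


def assign_variation(visitor_id: str, experiment: str = "price-guarantee-icon") -> str:
    """Deterministically bucket a visitor into 'original' or 'variation' (50/50).

    Hypothetical helper for illustration only.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    # Map the SHA-256 digest to an integer bucket in [0, 100).
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100
    return "original" if bucket < 50 else "variation"


# The same visitor always sees the same version across page views.
print(assign_variation("visitor-123"))
print(assign_variation("visitor-123"))

# Across many visitors, the split lands close to 50/50.
counts = {"original": 0, "variation": 0}
for i in range(10_000):
    counts[assign_variation(f"visitor-{i}")] += 1
print(counts)
```

Hashing, rather than drawing a fresh random number on every page view, keeps the experience consistent for returning visitors, which matters when a purchase spans several sessions.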

We use a split testing platform like Optimizely or Visual Website Optimizer to set up and run our test. Here are the actual results of our 120% price guarantee icon test.

Figure 7: The test variation with the pricing icon produced a 31.3% improvement in transactions

As you can see from Figure 7, the variation that added the price guarantee icon produced a 31.3% increase in transactions at 99% statistical significance.
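To make the numbers in a result like this concrete, here is a minimal sketch of how relative lift and significance can be computed from raw counts. It uses a two-sided, two-proportion z-test, which is one common method; the platforms mentioned above may use different statistics, so treat this as an illustration rather than a reproduction of their math. The visitor and transaction counts are hypothetical and are not the data behind Figure 7.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def ab_test_summary(conv_a: int, n_a: int, conv_b: int, n_b: int) -> dict:
    """Relative lift of B over A plus a two-sided, two-proportion z-test."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    lift = (rate_b - rate_a) / rate_a
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - normal_cdf(abs(z)))
    return {"lift": lift, "z": z, "p_value": p_value}


# Hypothetical counts (not the results from Figure 7):
# A = original page, B = page with the price guarantee icon.
result = ab_test_summary(conv_a=400, n_a=20_000, conv_b=500, n_b=20_000)
print(f"lift = {result['lift']:.1%}, z = {result['z']:.2f}, p = {result['p_value']:.4f}")
```

In this framing, a result reported at 99% significance roughly corresponds to a p-value below 0.01. Relative lift compares the two conversion rates, so a 31.3% lift means the variation’s rate was about 1.313 times the original’s, not 31.3 percentage points higher.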

The benefit of testing the price guarantee icon is that it controls for all other marketing efforts that might be running during the test, letting you focus on the specific change and whether it creates additional value. This is because any promotional traffic, along with other outside factors driving traffic to the site, has a 50% chance of being bucketed into the original and a 50% chance of going into the variation. So any behavioral changes caused by these external promotions affect the original and the variation equally on average, which cancels out their effect on the comparison.
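A quick simulation illustrates why random assignment neutralizes outside promotions. The sketch below assumes, hypothetically, that 30% of visitors arrive from an email promotion, then flips a fair coin for each visitor to choose the arm. Because assignment ignores where the visitor came from, both arms end up with roughly the same share of promotional traffic.

```python
import random


def simulate_split(n_visitors: int, promo_share: float, seed: int = 42) -> dict:
    """Randomly assign visitors 50/50 and report the promo-traffic share per arm."""
    rng = random.Random(seed)
    totals = {"original": 0, "variation": 0}
    promo = {"original": 0, "variation": 0}
    for _ in range(n_visitors):
        # Hypothetical: some visitors arrive via an outside promotion (e.g. an email blast).
        from_promo = rng.random() < promo_share
        # Assignment ignores traffic source entirely: a fair coin flip.
        arm = "original" if rng.random() < 0.5 else "variation"
        totals[arm] += 1
        promo[arm] += from_promo  # bool counts as 0 or 1
    return {arm: promo[arm] / totals[arm] for arm in totals}


shares = simulate_split(n_visitors=50_000, promo_share=0.30)
for arm, share in shares.items():
    print(f"{arm}: {share:.1%} of visitors came from the promotion")
```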

Key Takeaways

We have several takeaways from this article.

- Making changes to your website and then looking at your web analytics tool is NOT a good way to evaluate the effectiveness of the change.

- The only way to understand how a website change is affecting business value is to run an A/B test so that the change can be directly measured against the original page.

- Correlation does not imply causation, and the only way to establish the connection between a web page change and improved performance is to run a scientifically controlled experiment (e.g., an A/B test). In addition, the experiment results allow you to project the additional revenue the test variation will produce over time, so you can calculate the actual revenue improvement of the change.
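As a rough illustration of projecting revenue from a test result, the sketch below multiplies a baseline by the measured lift over a chosen period. The function name and inputs are hypothetical, and the projection rests on simplifying assumptions: the lift stays constant after the test ends, and (when the lift was measured on transactions) average order value is unchanged.

```python
def projected_incremental_revenue(monthly_baseline_revenue: float,
                                  relative_lift: float,
                                  months: int = 12) -> float:
    """Extra revenue if the variation's lift applies to the baseline for `months`.

    relative_lift is a fraction, e.g. 0.10 for a 10% lift.
    """
    # Simplifying assumption: the lift measured in the test stays constant over time
    # and applies uniformly to all revenue from the tested pages.
    return monthly_baseline_revenue * relative_lift * months


# Hypothetical inputs: $200,000/month from product detail pages, 10% lift.
extra = projected_incremental_revenue(200_000, 0.10, months=12)
print(f"Projected extra revenue over 12 months: ${extra:,.0f}")
```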

If you’d like to learn more about how A/B testing can help validate your marketing decisions, please visit our A/B Testing section and fill out the form at the bottom of the page.